A few years ago, when we first got an Alexa-bot, I wanted to do a little test. My daughters were about 9 and 5 at the time, and I wanted to see how they reacted to it. I showed them how to give the cylinder its basic commands, playing their favorite music and telling us what the weather would be for the day. They picked it up right away, although the 5-year-old’s higher voice didn’t always catch the robot’s ear. But, more importantly, I noticed something that I always found very telling, even if I can’t put my finger on what it means…

I made sure to refer to Alexa in a generic way, “it.” My daughters immediately called it “her.” It’s not a leap, probably just anthropomorphizing based on the default voice, but I was struck by how easily and quickly it happened. They didn’t have any hesitation in referring to this black plastic cylinder in the same fashion as a human being.

Who’s there?

This has long left me curious about the potential of our interactions with AI. IVRs have long been commonplace, and chat bots are facilitating increasingly robust interactions between companies and their customers. Think of every call that starts with “You have reached the [company] automated system,” or of IKEA’s “Anna,” the prototypically Swedish personality of their chat bot. I assumed that interacting with a robot could be incredibly helpful and efficient, but I also took for granted that I would know I was doing it. Then this appeared…

Last May, Google’s CEO demonstrated how Duplex AI takes a command from a person, “make me a haircut appointment on Tuesday morning anytime between 10 and 12,” and then calls a hair salon and sets up the appointment, using a lifelike voice to interact with a person. The crowd goes wild.

I wasn’t a fan.

What I saw was a non-consensual interaction with an artificial intelligence system. It was, at the least, rude. For example, when someone speaks on the phone, isn’t it customary for them to identify themselves? If you take a call from someone and they don’t identify themselves, aren’t you suspicious? And if the woman running the hair salon were to ask, “to whom am I speaking?” how would the Googlebot answer?

We didn’t get to find out in that clip, and these are knowable and addressable problems, but it made me uneasy that without this customary courtesy in place, the engineers in attendance still considered it a triumph.

This is not to take away from the truly remarkable technical achievement this phone call represents, but it reaffirms the stance I have had on AI for a long time:

I am not worried about the emergence of AI; I am worried about the implementation of AI in a systemic technical culture that moves fast and with a certain ethical carelessness.

Unclear anxiety

Among designers, I have been a part of conversations that are a bit anxious about the future of AI from multiple directions.

- So are we designing conversations with robots?

- Will a robot take my design job?

I can confess: I have had some of this same anxiety once in a while, and a lot of it comes from a lack of knowledge. Reading about AI is somewhere between a deep dive into a technical black hole and an over-cologned sales pitch about the value it will deliver.

Getting some clarity

I had the pleasure of attending a workshop at the ServiceNow office examining the still-to-be-defined future of AI’s impact on the design industry. The workshop was primarily run by Cody Frew, a Sr. Manager of User Experience Design for ServiceNow’s Platform Tools, and Laura Coburn, a Sr. UX/UI Designer for ServiceNow’s Vulnerability Management Product.

It was a pretty interesting session! They covered a little history on AI, where it came from, where it is today, and where it’s headed.

In a light summary, we looked at the history of AI’s emergence, which is full of ups and downs. Then we broke AI down into three things…

- Artificial intelligence, the interactions that act like people

- Machine learning, the ability for machines to identify patterns in ways people can understand

- Deep learning, the ability for machines to develop insight into situations, think: robots that win at chess or go

(It turns out, there is a fourth layer, neural networks, but that’s a conversation for another day.)

Then the conversation shifted to some of the ways that AI is already percolating into the design community and its processes. For instance, Airbnb’s sketch-to-code uses computer vision to translate sketches from a whiteboard into components in their design library. Netflix uses some really complicated and really fascinating algorithm tech to generate all those placeholder images that they then test a zillion times over to entice you to click on them. To some extent, Netflix designers would shift roles. Instead of mocking up title cards, they would allot more time to evaluating and tuning the algorithm’s output while, in a new way, partnering with the tech to introduce new visual concepts back into the scope of the algorithm. When machine learning merges with high-speed automation and human creativity, the role of the designer can shift.

So where else will there be automation? And where will the automation affect people’s jobs?

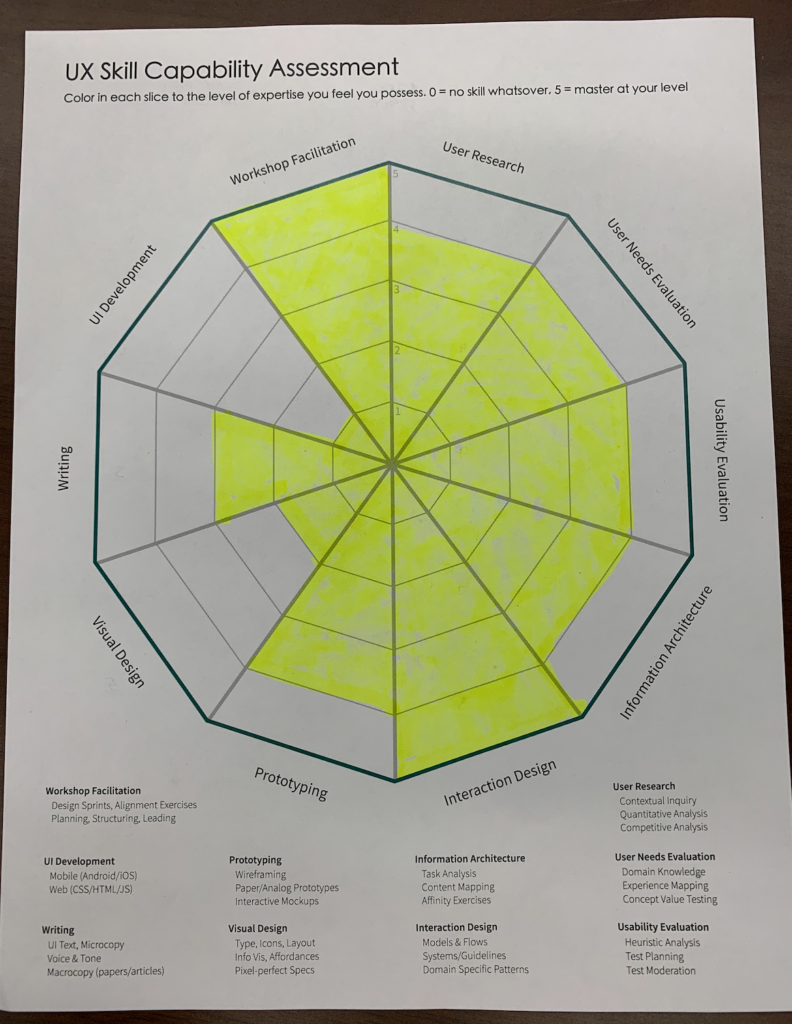

The workshop guided us through a skills assessment, rating ourselves on a scale of 1-5 in various design skill areas, each with specific tasks or areas of knowledge. We filled in a diagram, 1 at the center, 5 on the perimeter, creating a spider graph (or RADAR graph or whatever you want to call it).

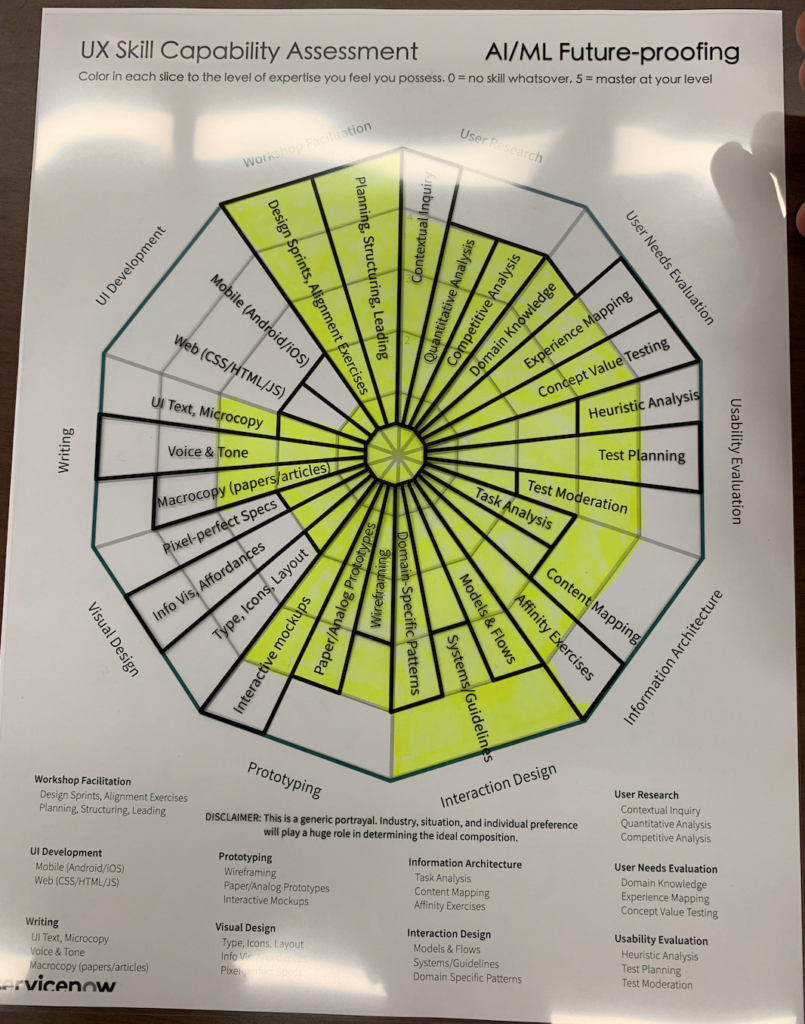

Once our self-assessments were ready, the speakers passed out some delightfully retro transparencies to lay over the skills assessment. The transparency represented how each area of knowledge is split into specific capabilities, and how each skill capability presumably stacks up in a world where AI has permeated the tools and skills of designers.

In short, the future state of design is mostly focused on wrangling people through workshops and research. That felt good for me. Interaction design, prototyping, and writing would go through some realignment, which I found interesting.

There was a fair amount of conversation about the reduced need for pixel-perfect specs and front end web dev, with the expectation that the bots will take this over in spades, and that the skillset will shift towards information visualization, something that is still nuanced. There were some visual designers and front end developers in the room who didn’t seem happy about that.

Does it change the process?

This workshop left me to evaluate how the design process would change with the introduction of AI. Naturally, there’s an impact, but where? Here are some hypothetical high-level areas where the design process could be affected by AI in the near future.

Design research: Participant recruitment could be made more efficient by using AI to identify candidates for participation in research. ML would identify behaviors across products through the deep analysis of retargeting tracking and other data. Once consenting participants are identified, they could opt in to having their smartphone collect ambient data and communicate with other enabled devices, forming anonymized webs of tracked behavior data. Behavior models could be augmented to create norms of probable interaction based on user decisions in situations across different media that were previously untracked.

In addition to participant engagement, existing customer contact could be mined at new levels. For instance, natural language processing could comb through recordings of customer service calls, revealing trends and attitudinal metrics. Currently, customer service reps have a few seconds to give each call a single disposition code, so some data always falls through the cracks, since a call can cover multiple topics. Calls could be more accurately re-dispositioned, rated in tone, and more accurately tied to business outcomes.
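To make the single-code problem concrete, here is a minimal sketch of tagging one call with every topic it touches. It is only an illustration: the topic names, the keyword lists, and the `disposition_codes` function are all made up for this example, and simple keyword matching stands in for the natural language processing a real system would need.

```python
# Hypothetical topics and keywords, invented for illustration only.
# A real system would rely on a trained NLP model, not a keyword list.
TOPIC_KEYWORDS = {
    "billing": {"invoice", "charge", "refund"},
    "shipping": {"delivery", "package", "tracking"},
    "account": {"password", "login", "email"},
}


def disposition_codes(transcript: str) -> list[str]:
    """Return every topic whose keywords appear in the transcript."""
    # Normalize each word: strip common punctuation and lowercase it.
    words = {w.strip(".,!?").lower() for w in transcript.split()}
    # Keep a topic when at least one of its keywords was spoken.
    return sorted(
        topic for topic, keys in TOPIC_KEYWORDS.items() if words & keys
    )


call = "I need a refund, and my package never showed up."
print(disposition_codes(call))
```

A rep forced to pick one code would have to file this call under billing or shipping. Returning a list keeps both.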

Problem definition and visualization: Based on trends found when modeling user behavior data, a system could identify user personas and their interactions, and ultimately identify where obstacles may be causing problems for those personas. Drafting a user journey could be automated or accelerated by a smart visualization tool that is able to layer multiple channels of interaction into a set diagrammatic visualization language. A sketched whiteboard of a user journey or other workflow could be translated using computer vision and digitized into a workflow diagram (sort of like Lucidchart on steroids).

Prototyping: Sketched UI designs could be realized against a fixed development framework, like Google Material. This means a designer could work with stakeholders to rapidly sketch options for their product challenges, while turning them into developed prototypes. Additional gestures or visual shorthand would be introduced to program interactions into the prototype. Once the prototype is in place, it could be ‘dry fit’ against the behavior models culled through ML, showing the likelihood that a user completes a task as designed.

Ethics: Many discussing the introduction of AI into the design and technology fields raise ethical concerns, but few are proposing solutions. I see two key areas for improvement.

First, with an advanced understanding of criminal and unethical behavior data, create an algorithm or other programmatic method that can be used to automatically QA a product for potential abuse and systematic biases of the humans and algorithms involved. Use AI to protect against the risk emerging in AI.

Second, reaffirm human understanding within the design process, to reality-check design work each step of the way. Designers (and all members of the product development disciplines) will need to incorporate a new question into their acceptance criteria and do so further upstream, repeating the question throughout their process: How can someone use this product for harm? Our collective ingenuity often outsmarts the systems we put into place, but our vigilance can overcome it.

We’ll see

There are many more areas that have the potential to be augmented and accelerated by AI. More importantly, just writing the descriptions above, I was immediately struck by how potentially invasive it could be, allowing private entities like corporations access to incredible amounts of data, with the ability to tie that data together in ways never before imagined. At a minimum, when developing products using AI, we simply must inform people that we will be using this technology, that we will be combing their data in new ways and connecting it to other data. Informed consent will be critical as experimentation becomes increasingly consequential. Checking and rechecking our work against negative unintended outcomes will become just as important as conceptualizing a new product idea.

It is also important to understand that these abilities will not be evenly distributed in the world. Some companies, people, governments, or communities will have access to the remarkable power of artificial intelligence. I am not confident that all of those early innovators will be sensible in implementing this technology. Hopefully, we can support each other in building out our own understanding as a community.

My kids, who didn’t hesitate to anthropomorphize a robotic cylinder, are the first generational wave of pioneers in this new frontier that we will be building for them. Hopefully they are the first recipients of an understanding, and not the first wave of victims of our hubris.